Image courtesy Zewe, Adam. “Using sound to model the world”. MIT News, 1st November 2022 [source]

As companies race to build virtual worlds that re-create aspects of day-to-day life, researchers at MIT have demonstrated a method for predicting how a given sound will be heard in different environments.

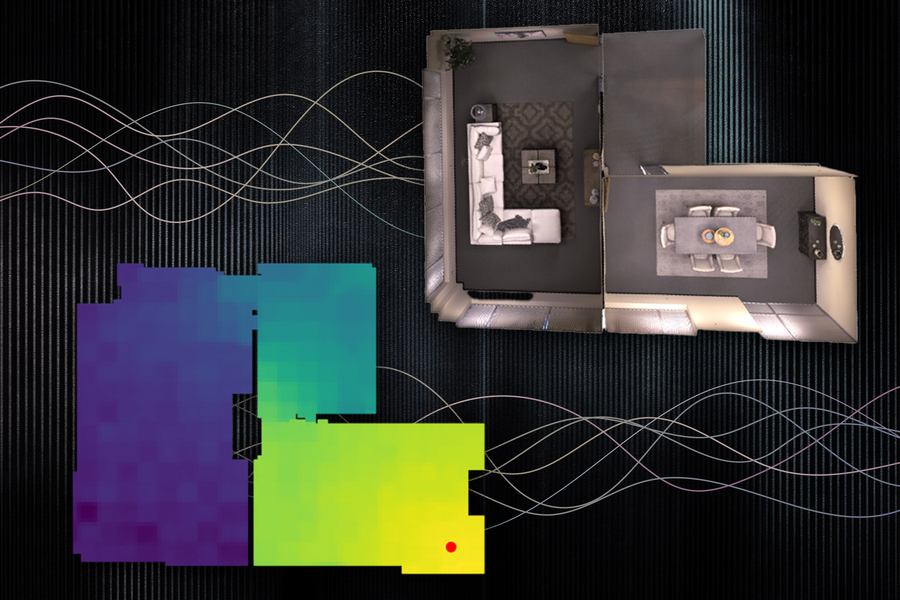

Alongside 3D modelling techniques, Yilun Du of MIT incorporated acoustic properties into a machine learning model, allowing simulations to be created for listeners standing at different points within a space. This is demonstrated in Andrew Luo’s video shown below:

As can be heard, the received sound varies from overly loud and clear to quiet and muffled, depending on the user’s virtual location as they walk around the environment.

A neural acoustic field (NAF) creates a virtual grid in which the objects and architectural features of a space are analysed, all of which play a part in how sound propagates and is eventually received by a listener.
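To make the grid idea concrete, here is a toy sketch in Python. It is not the researchers' implementation: the random feature grid stands in for features a real model would learn, and the names `make_grid`, `sample_grid` and `predict_loudness`, the single "loudness" score, and the linear read-out are all assumptions made for illustration. It only shows how features stored on a grid can be looked up at the sound source and at the listener, then combined into a prediction that changes as the listener moves.

```python
import math
import random


def make_grid(rows, cols, dim, seed=0):
    """Random stand-in for learned local features (hypothetical), one vector per grid point."""
    rng = random.Random(seed)
    return [[[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(cols)]
            for _ in range(rows)]


def sample_grid(grid, x, y):
    """Bilinearly interpolate the feature vector at a continuous position (x, y)."""
    rows, cols = len(grid), len(grid[0])
    # Clamp the query to the grid so positions outside the room still resolve.
    x = min(max(x, 0.0), cols - 1)
    y = min(max(y, 0.0), rows - 1)
    x0, y0 = min(int(x), cols - 2), min(int(y), rows - 2)
    tx, ty = x - x0, y - y0
    dim = len(grid[0][0])
    return [
        (1 - ty) * ((1 - tx) * grid[y0][x0][k] + tx * grid[y0][x0 + 1][k])
        + ty * ((1 - tx) * grid[y0 + 1][x0][k] + tx * grid[y0 + 1][x0 + 1][k])
        for k in range(dim)
    ]


def predict_loudness(grid, weights, emitter, listener):
    """Toy read-out: combine features at both positions into a score in (0, 1)."""
    features = sample_grid(grid, *emitter) + sample_grid(grid, *listener)
    features.append(math.dist(emitter, listener))  # source-to-listener distance
    score = sum(w * f for w, f in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-score))  # squash to (0, 1) with a sigmoid


if __name__ == "__main__":
    grid = make_grid(rows=4, cols=4, dim=3, seed=1)
    rng = random.Random(2)
    weights = [rng.uniform(-1, 1) for _ in range(2 * 3 + 1)]  # untrained, random
    emitter = (0.5, 0.5)
    for listener in [(0.5, 1.0), (2.0, 2.0), (3.0, 0.5)]:
        print(listener, predict_loudness(grid, weights, emitter, listener))
```

Because the weights here are random rather than trained, the printed numbers carry no acoustic meaning; the point is only that the same source gives a different value at each listener position.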

In light of this, Chuang Gan of MIT states that the technique could help make a potential ‘metaverse’ as true to real life as possible.